Importance of Mathematics in Big Data

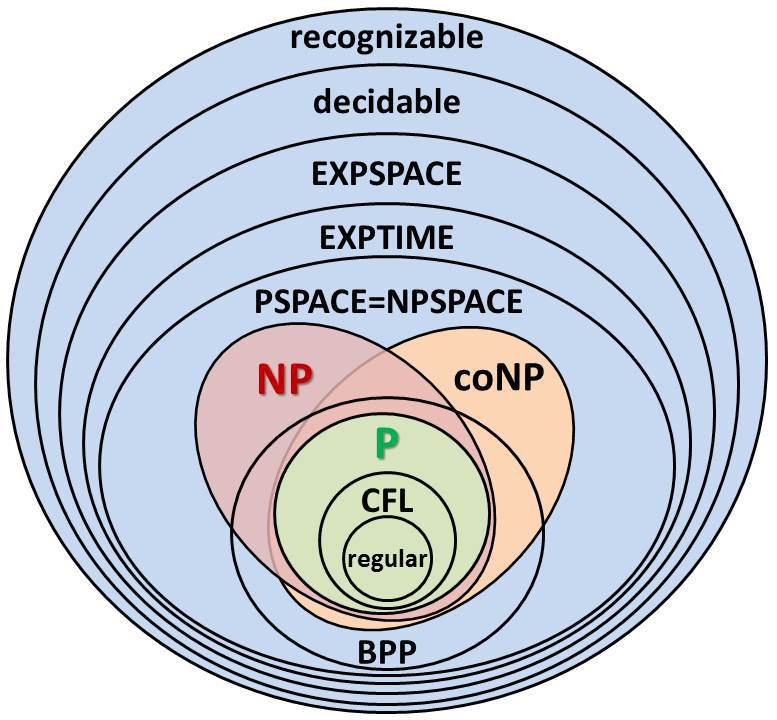

In the last decade massive datasets are constantly generated by Science and the Internet to be used for hypothesis testing or for exploratory purposes. However, these datasets can be so large and complex, that it is infeasible to process them with traditional techniques. Polynomial complexity, which is often sufficient for a lot of computer science projects, is however prohibitive when working with big data. There, due to computational constraints, we often have to look for algorithms with sub-linear complexity in time and space. We will advocate about the importance of mathematics in this field through significant paradigms of their use that lie at the core of the field.

Lowering the complexity of techniques beyond traditional bounds often demands the use of randomness and approximation. This compromise is often necessary, as we can prove theoretical bounds for non-randomized and/or non-approximate algorithms. Therefore, we need to use mathematical tools from probability theory, like concentration inequalities, to be able to analyze and design these types of algorithms. Ideas from analysis should also be used in tandem to generate tight approximation bounds. Furthermore, in my opinion randomness and approximation lie at the very heart of the algorithmic side of this field. Subjects like Randomized Linear Algebra are gaining increasing popularity because of their demand in the modern era of big data.

In general, one of the main reasons for the creation of these large datasets is to make estimations and model an overall distribution. Here, in contrast to the algorithmic view point mentioned above, data can be viewed as a resource. Processing more data samples may be computationally expensive, but their use can also lead to a better approximation of the assumed underlying probability distribution. This is in my opinion a very interesting and fundamental tradeoff. For example, when learning by optimizing an expected error over a family of functions, the overall error can be decomposed into error from the approximation of the expectation and error from the optimization process. Hence, we can balance the total error by increasing the number of samples and using a coarser but faster optimization process on the given time budget [1]. This important idea, that more data can lead to simpler algorithms, as asymptotic results can be invoked, brings together computational and statistical thinking. Mathematical results are needed for exploiting this concept and handling the tradeoff [2].

It is often said that the most important aspect of big data is not the number of data points but their dimension, complexity. When learning with high dimensional data, we often have exponential complexity with respect to the number of dimensions, large statistical complexity and incomprehension when performing analytical tasks. However, it is observed that while modern datasets are generated with a high number of dimensions, they often adhere to an intrinsic low dimensional structure. In order to remedy these curses, the key question we need to ask is: Is there any low-dimensional structure in the data, and how could we exploit this structure algorithmically? Answering these kinds of questions gives birth to the design of dimensionality reduction methods. This is a very mathematically demanding subject, that has its roots in functional analysis, and requires knowledge from many different fields. For example, proving the Johnson-Lindenstrauss lemma and designing its variant transforms uses tools from probability theory, ideas about sparsity, random matrix theory, and leverages the Fast Fourier Transform [3],[4],[5]. While tools from “pure” mathematics such as, algebraic topology, are also used in the new popular subject of Topological Data analysis [6].

Furthermore, usually the complexity of the data increases in together with the number of samples over the lifetime of an application. So much so, that in a lot of cases the dimension can be in the same or higher order with the dataset size. As a result, classical statistical theory fails to be consistent with empirical results because, it does not take this fact into account. Instead, we need to use results from a modern area of mathematics called high-dimensional statistics and probability in order to correct the error bounds and get a truer analysis [7]. This field helps us understand high-dimensional datasets and should be at the toolkit of every researcher in the field of big data.

For a more detailed example of the above concepts, I personally use some of these principles in my diploma thesis. The subject of my diploma thesis is the design of a massively parallel implementation of the t-SNE algorithm on the GPU and exploring its use in embedding large sparse graphs. t-SNE [8] is a dimensionality reduction method for data visualization that has been greatly influenced by the use of mathematics both in a theoretical ,design, level and an algorithmic level. Starting despite its popularity, the algorithm is based on a non-convex optimization process and the use of two user defined parameters. Later Theoretical proofs that it preserves clusters, with some metric definition of clustered data, were developed, cementing its use, verifying the empirical observations and finding optimal values for these parameters [10]. These proofs use tools from probability theory like the Berry-Esseen Theorem and techniques from dynamical systems to analyse the convergence of the optimization method. On the level of the performance optimization of the algorithm, great advancements have been made in order to turn the initial quadratic and later O(nlogn) [9] complexity to linear in, every descent iteration [11]. Through approximations, it is revealed that a sparse representation can be used, making linear algebra algorithms more efficient. Also the algorithm uses a spline decomposition of a matrix/tensor and as a result the recurrence computation can be accelerated by the use of the Fast Fourier Transform.

Ending, big data is a new and very active field that introduces mathematical challenges in theoretical, algorithmic and method design and statistical levels. New researchers need to have an operational command of all those disciplines as they often need to be used together in the context of many problems of this field.

References:

[1] Léon Bottou and Olivier Bousquet: The Tradeoffs of Large Scale Learning

[2] Venkat Chandrasekaran, Michael I. Jordan: Computational and Statistical Tradeoffs via Convex Relaxation

[3] Johnson William B., Lindenstrauss, Joram: Extensions of Lipschitz mappings into a Hilbert space

[4] Ailon Nir, Chazelle Bernard: Approximate nearest neighbors and the fast Johnson–Lindenstrauss transform

[5] Kane Daniel M., Nelson Jelani: Sparser Johnson-Lindenstrauss Transforms

[6] Frédéric Chazal, Bertrand Michel: An introduction to Topological Data Analysis: fundamental and practical aspects for data scientists

[7] Martin J. Wainwright: High-Dimensional Statistics: A Non-Asymptotic Viewpoint

[8] Laurens van der Maaten, Geoffrey Hinton: Visualizing Data using t-SNE

[9] Laurens van der Maaten: Accelerating t-SNE using Tree-Based Algorithms

[10] Sanjeev Arora, Wei Hu, Pravesh K. Kothari: An Analysis of the t-SNE Algorithm for Data Visualization

[11] George C. Linderman, Manas Rachh, Jeremy G. Hoskins, Stefan Steinerberger, Yuval Kluger: Efficient Algorithms for t-distributed Stochastic Neighborhood Embedding